Hot spot in the data center: causes and effects

What temperature in a data center?

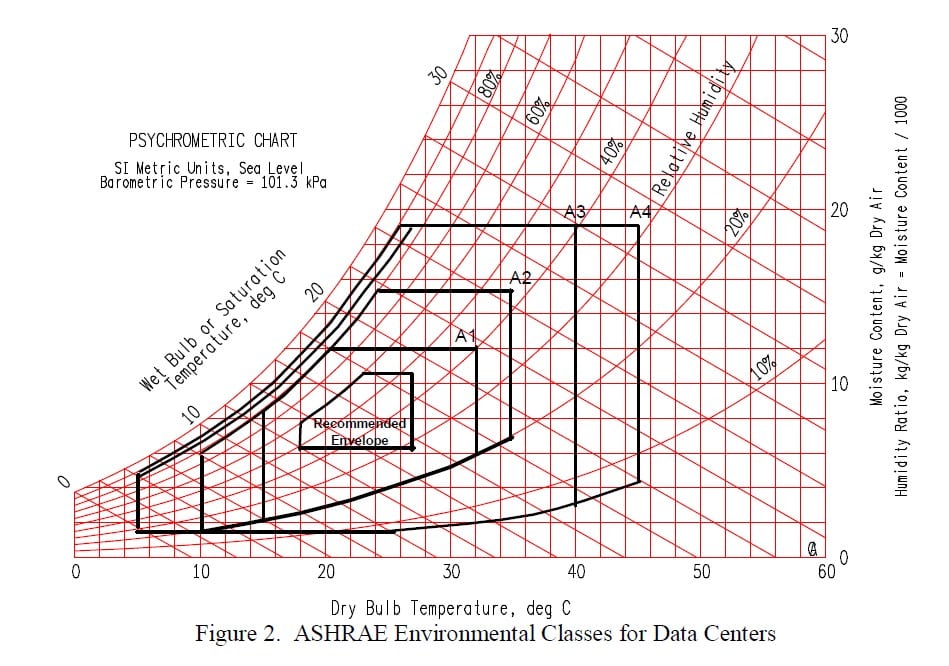

ASHRAE standard

The ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) data center temperature standard is a standardized recommendation for temperature management in data centers. It is regularly updated to take account of technological advances and industry best practices.

The ASHRAE standard recommends a temperature range for data centers rather than a single temperature. The typical recommended range is 18°C to 27°C (64°F to 81°F). This gives data center operators the flexibility to adjust the temperature to suit their specific needs.

One of the main aims of this standard is to optimize the energy efficiency of data centers while maintaining appropriate conditions for equipment operation. Temperatures that are too high can cause components to overheat and systems to fail, while temperatures that are too low increase the cooling system's energy consumption.
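As an illustration, the recommended range can be expressed as a simple check on a measured temperature. This is a minimal sketch: the 18°C and 27°C bounds come from the text above, while the function names and the returned labels are hypothetical and chosen only for this example.

```python
# Recommended range quoted in this article (degrees Celsius).
RECOMMENDED_MIN_C = 18.0
RECOMMENDED_MAX_C = 27.0


def c_to_f(temp_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def classify_temperature(temp_c: float) -> str:
    """Return a label (hypothetical wording) for a measured temperature."""
    if temp_c < RECOMMENDED_MIN_C:
        return "below range"  # colder than necessary: wasted cooling energy
    if temp_c > RECOMMENDED_MAX_C:
        return "above range"  # risk of overheating
    return "within range"


for temp in (16.0, 22.0, 29.0):
    print(f"{temp:.1f} C ({c_to_f(temp):.1f} F): {classify_temperature(temp)}")
```

The bounds are inclusive here, so a reading of exactly 18°C or 27°C counts as within range; that is a choice made for the sketch, not something the article specifies.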

Hot spots in data centers

Explanation of the main thermo-aerodynamic phenomena

Hot spots in a data center are defined by ASHRAE TC 9.9 as areas where the air entering servers, storage systems, routers or other electronic equipment is above 27°C. The rear area of the racks and areas in hot aisles are not considered hot spots. Hot spots can reduce reliability and damage electronic equipment because the equipment can no longer dissipate the heat it generates.

Server and hardware manufacturers may treat the presence of hot spots as a breach of the service contract terms and refuse warranty service on that basis.
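The definition above can be sketched as a filter over sensor readings: only intake (inlet) readings above 27°C count, and readings taken at the rear of the racks or in hot aisles are ignored. The `Reading` structure, the position labels and the sample values are assumptions made for this example, not part of any ASHRAE document.

```python
from dataclasses import dataclass

HOT_SPOT_THRESHOLD_C = 27.0  # inlet limit quoted in this article


@dataclass
class Reading:
    rack: str        # hypothetical rack identifier
    position: str    # "inlet" (front, cold aisle) or "exhaust" (rear, hot aisle)
    temp_c: float    # measured air temperature in degrees Celsius


def find_hot_spots(readings):
    """Keep only inlet readings above the threshold; exhaust readings never qualify."""
    return [
        r for r in readings
        if r.position == "inlet" and r.temp_c > HOT_SPOT_THRESHOLD_C
    ]


# Illustrative sample data, not measurements.
readings = [
    Reading("A01", "inlet", 24.5),
    Reading("A01", "exhaust", 38.0),  # hot, but at the rear: not a hot spot
    Reading("A02", "inlet", 29.1),    # intake air above 27 C: hot spot
]

for r in find_hot_spots(readings):
    print(f"Hot spot at rack {r.rack}: inlet air at {r.temp_c:.1f} C")
```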

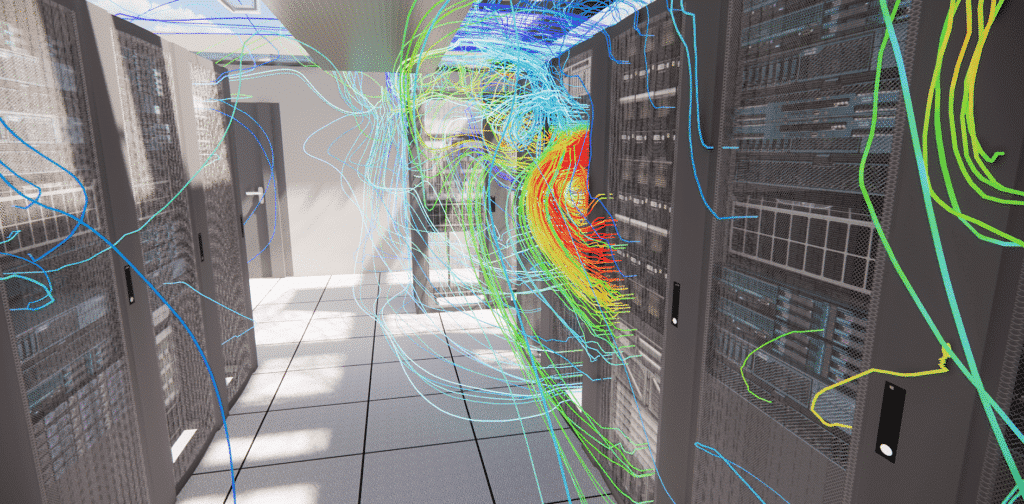

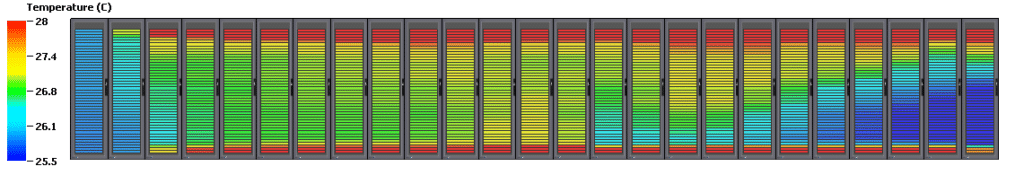

CFD simulation of a data center with generalized overheating (no partitioning)

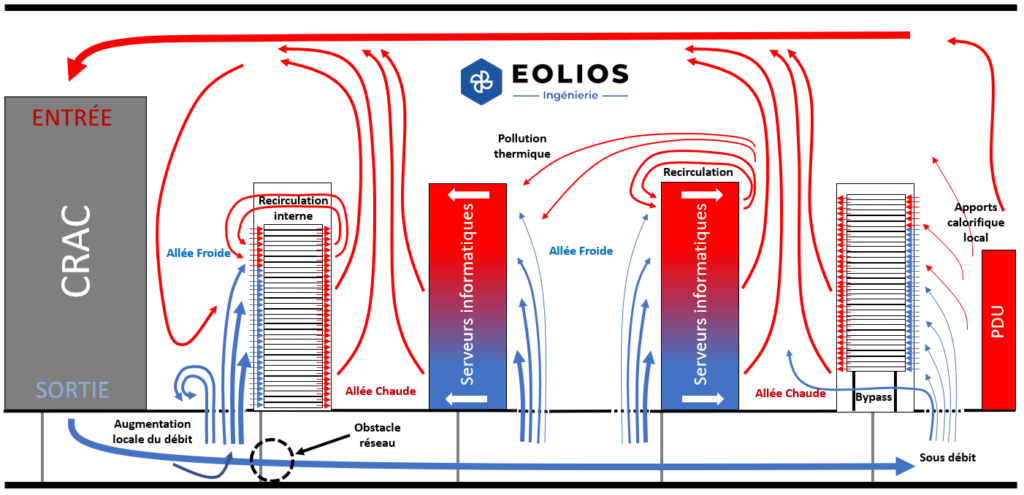

What kind of parasitic air inlets can there be in a data center?

These are usually unsealed cable or cable duct entries into the computer room, slots in air ducts, slots at the bottom of load-bearing supports, or simply holes in walls or doors. The raised floor itself may have openings for cable entry, openings under cabinets, surplus perforated tiles located outside the cold aisles, and openings around the edge of the computer room (behind air conditioning units, under power distribution units).

Excessive cooling capacity in a data center can be detrimental

Surprisingly, a lack of cooling capacity is not the only cause of hot spots. Excessive cooling capacity can lead to hot spots. How can this happen?

Suppose 8 cooling units are enough to cool the data center computer room, but 10 or 12 are installed and running. Each of the 10 or 12 units then operates at a lower load than it would if only 8 were running. The air supply from each unit decreases, which in turn creates additional hot zones in areas with low static pressure (dead zones).
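The arithmetic behind this example can be made explicit. The sketch below assumes, for simplicity, that the total heat load is shared equally between all running units; real units do not necessarily balance this way, and the function name is hypothetical.

```python
def load_fraction_per_unit(units_required: int, units_running: int) -> float:
    """Fraction of its rated load each unit carries, assuming equal sharing."""
    if units_running < units_required:
        raise ValueError("fewer units running than required")
    return units_required / units_running


for running in (8, 10, 12):
    fraction = load_fraction_per_unit(8, running)
    print(f"{running} units running: each at about {fraction:.0%} of rated load")
```

With 8 units required, running 10 puts each unit at about 80% of its rated load and running 12 at about 67%, which is the reduced per-unit operation described above.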

Eliminating hot spots in the data center

Protocol for reducing hot spots

The elimination of hot spots in a data center can be achieved in several stages:

1. Monitor temperature: Use temperature sensors to monitor the data center and identify hot spots. This enables early detection of overheating problems.

2. Lay out servers efficiently: Arrange servers with as much space between them as practical to allow better air circulation. Avoid clutter and obstacles that could impede cold air circulation.

3. Use cooling racks: Use cooling racks for servers that generate excessive heat. These racks dissipate heat more efficiently and allow better air circulation.

4. Manage cables: Organize cables in an orderly fashion so that they do not block airflow. Poorly organized cables can create hot spots.

5. Optimize ventilation and airflow: Ensure good ventilation and unobstructed airflow throughout the data center to help eliminate hot spots.

To ensure proper air circulation in the data center, install only the required number of perforated tiles in the cold aisles. Perforated tiles should not be located in hot aisles or in unused space in the computer room. To calculate the number of perforated tiles required when designing a data center, refer to the CFD sizing studies.

The location of the perforated tiles must correspond to the thermal load of each cabinet. General guidelines for tile placement are shown in the table below.

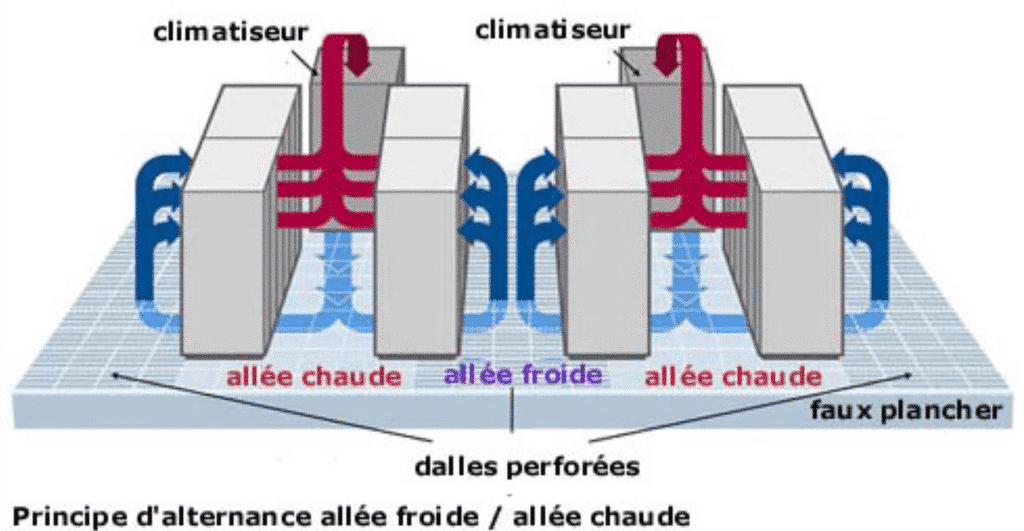
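Before a CFD sizing study is available, a first-order estimate of the airflow a cabinet needs can be obtained from the sensible heat balance: airflow = heat load / (air density × specific heat × air temperature rise). The sketch below assumes approximate air properties (about 1.2 kg/m³ and 1005 J/(kg·K)). The 5 kW load, the 12 K temperature rise and the 0.25 m³/s delivered per tile are hypothetical placeholders, not values from this article, and the result does not replace the CFD study.

```python
import math

AIR_DENSITY = 1.2           # kg/m^3, approximate value for air near room temperature
AIR_SPECIFIC_HEAT = 1005.0  # J/(kg*K), approximate value for dry air


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow needed to carry away heat_load_w with an air temperature rise of delta_t_k."""
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


def tiles_needed(heat_load_w: float, delta_t_k: float, tile_airflow_m3s: float) -> int:
    """Round up to whole tiles, given an assumed airflow delivered by one tile."""
    airflow = required_airflow_m3s(heat_load_w, delta_t_k)
    return math.ceil(airflow / tile_airflow_m3s)


# Hypothetical cabinet: 5 kW load, 12 K rise, 0.25 m^3/s per perforated tile.
airflow = required_airflow_m3s(5000.0, 12.0)
tiles = tiles_needed(5000.0, 12.0, 0.25)
print(f"Required airflow: {airflow:.3f} m^3/s, perforated tiles: {tiles}")
```

The actual airflow through a tile depends on the underfloor static pressure and the tile's open area, which is exactly what the CFD study resolves.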

Implementation of hot and cold aisles

The hot and cold aisles of a data center are concepts for managing air circulation to maintain an optimum temperature inside the data center.

The cold aisle is the space where cool air is introduced into the data center. IT equipment, such as servers, is positioned to face this cold aisle so that it can draw in the cool air to cool its internal components.

The hot aisle, on the other hand, is the space where hot air is extracted from the data center. The data center’s air-conditioning and cooling systems evacuate the hot air from this aisle, using equipment such as cooling units, fans and heat exhaust ducts.

The aim of separating the hot and cold aisles is to maximize cooling efficiency by minimizing mixing between the two types of air. This reduces the energy consumption needed to maintain the right temperature, and improves the performance and lifespan of IT equipment.

This concept of separating hot and cold air optimizes data center energy costs by minimizing energy losses associated with the mixing of hot and cold air. It also maintains an even temperature throughout the data center, reducing the risk of equipment overheating.
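The effect of mixing can be illustrated with a simple energy balance. If a fraction of the air reaching a server inlet is recirculated hot exhaust air and the rest is cold supply air, the inlet temperature is the weighted average of the two (assuming equal air density and specific heat for both streams). The temperatures and fractions below are hypothetical values chosen for illustration.

```python
def inlet_temperature_c(supply_c: float, exhaust_c: float,
                        recirculation_fraction: float) -> float:
    """Inlet temperature when a fraction of the intake air is recirculated exhaust air."""
    if not 0.0 <= recirculation_fraction <= 1.0:
        raise ValueError("recirculation_fraction must be between 0 and 1")
    return supply_c + recirculation_fraction * (exhaust_c - supply_c)


# Hypothetical room: 20 C supply air, 35 C exhaust air.
for fraction in (0.0, 0.2, 0.5):
    temp = inlet_temperature_c(20.0, 35.0, fraction)
    print(f"{fraction:.0%} recirculation: inlet at {temp:.1f} C")
```

In this example, 20% recirculation raises the inlet air from 20°C to 23°C, and 50% recirculation pushes it to 27.5°C, above the 27°C limit, even though the supply air itself is well within range.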

Why run a CFD simulation of a data center?

CFD simulation provides information on the relationship between the operation of mechanical systems and changes in the thermal load of IT equipment. With this information, IT and site staff can optimize airflow efficiency and maximize cooling capacity.

It is also necessary to seal unused space in the cabinets. This involves installing blanking panels to cover any open space in the rack not occupied by equipment. Also seal all side gaps between the mounting rails and the cabinet walls, as well as openings at the bottom and top of the cabinets. Do not allow hot air to recirculate from the back of the cabinet to the front.

Free up as much space as possible under the raised floor for better air circulation. Remove unused cables, debris, etc.

To check that everything has been done correctly, measure the temperature at the server air inlets, especially in the top rows of the telecommunication cabinets. Several kinds of thermometer can be used: a non-contact IR thermometer, LCD strip thermometers or wireless RF temperature monitors. If the intake air is too cold or too hot, perforated tiles can be added, moved or replaced. Repeat this check regularly, especially when new equipment is installed or old equipment is dismantled.

Airflow simulation and CFD analysis

To benefit from all these measures, it is necessary to carefully study the thermal load, the capacity of the cooling equipment and the airflow in the data center's computer room.

Of course, additional costs will be incurred, but these costs are justified. According to statistics from experts involved in optimizing data center air-conditioning systems, the above actions reduce energy consumption by at least 10% and by as much as 36%.
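To put the 10% to 36% figure in concrete terms, the sketch below applies that range to a baseline consumption. The baseline of 1,000,000 kWh per year is a hypothetical value used only to show the arithmetic, and the function name is an assumption of this example.

```python
def savings_range_kwh(annual_kwh: float, low: float = 0.10, high: float = 0.36):
    """Return the (lower, upper) energy savings for the reduction range quoted above."""
    return annual_kwh * low, annual_kwh * high


# Hypothetical baseline consumption, for illustration only.
lower, upper = savings_range_kwh(1_000_000.0)
print(f"Estimated savings: {lower:,.0f} to {upper:,.0f} kWh per year")
```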