Understanding how a Data Center works

Understanding how data center air conditioning works

With the growing volume of information and the increasing computerization of work processes, keeping that information safe while servers run without interruption is becoming ever more critical. A failure in this area can suspend all of a company's activities and lead to serious losses. One of the key requirements for stable server operation is maintaining the optimum air temperature throughout the server room, which is achieved with precision air-conditioning systems.

Operating a data center is energy-intensive, and the cooling system often consumes as much (or more) energy as the computers it supports.
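This balance between cooling energy and IT energy is commonly expressed as PUE (Power Usage Effectiveness), the total facility power divided by the power drawn by the IT equipment. The sketch below is only an illustration: the function name and the power figures are hypothetical and do not come from a real site.

```python
def pue(it_power_kw: float, cooling_power_kw: float, other_power_kw: float = 0.0) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    total_kw = it_power_kw + cooling_power_kw + other_power_kw
    return total_kw / it_power_kw


# Hypothetical site where cooling draws as much power as the IT equipment.
print(pue(it_power_kw=500.0, cooling_power_kw=500.0))  # 2.0

# Same IT load with cooling reduced to 30 % of the IT power.
print(pue(it_power_kw=500.0, cooling_power_kw=150.0))  # 1.3
```

A cooling system that consumes as much as the computers therefore gives a PUE of at least 2, before lighting, UPS losses and other loads are counted.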

In this article, we’ll look at some of the most commonly used data center cooling technologies, as well as new approaches to CFD simulation.

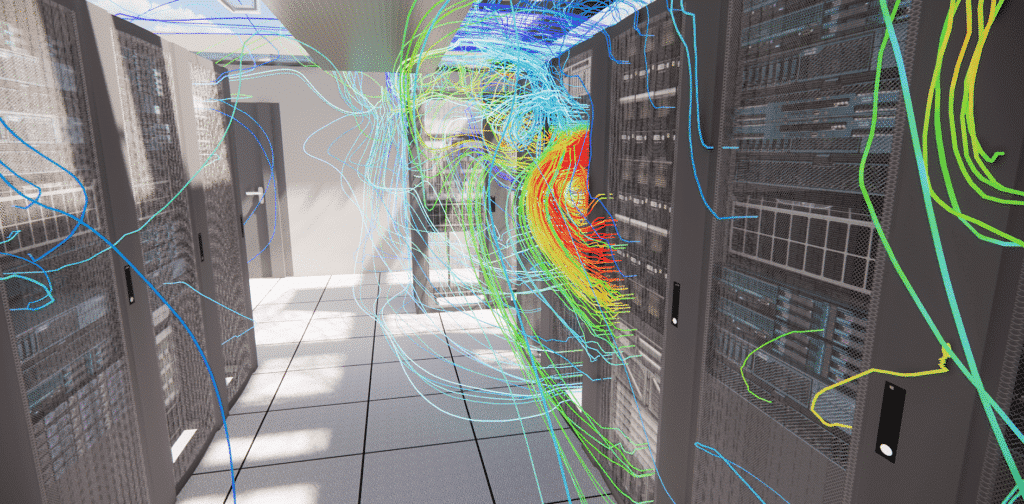

Cold aisle / hot aisle design

This data center rack layout uses alternating rows of “cold aisles” and “hot aisles”.

Cold air is delivered in front of the racks (usually through grilles) so that the servers can draw it in, while the hot aisles collect the heat exhausted behind the servers. Ventilation ducts are usually connected to a false ceiling that takes the warm air from the “hot aisles” to be cooled. The cooled air is then supplied to the “cold aisles” through a raised floor or ducts (or, in some designs, released directly into the room).

Empty rack slots should be fitted with blanking panels to prevent overheating and reduce the amount of wasted cold air. The gaps left by missing servers allow parasitic air transfers, driven by the pressure differences between hot and cold areas. This stray air movement is wasted energy.
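To give an order of magnitude for the air that must travel from the cold aisle to the hot aisle, the sensible heat balance P = ρ · V · cp · ΔT can be rearranged for the volume flow V. The sketch below assumes standard air properties (density 1.2 kg/m³, specific heat 1005 J/(kg·K)); the rack power and temperature rise are hypothetical values, not figures from this article.

```python
AIR_DENSITY_KG_M3 = 1.2    # assumed: air at roughly 20 °C, sea level
AIR_CP_J_KG_K = 1005.0     # assumed: specific heat of dry air


def required_airflow_m3_per_h(heat_load_kw: float, delta_t_k: float) -> float:
    """Airflow needed to carry away heat_load_kw with an air temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    flow_m3_per_s = heat_load_kw * 1000.0 / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)
    return flow_m3_per_s * 3600.0


# Hypothetical 10 kW rack with a 12 K rise between cold aisle and hot aisle.
print(f"{required_airflow_m3_per_h(10.0, 12.0):.0f} m3/h")
```

Under these assumptions the rack needs about 2,500 m³/h. Any cold air that bypasses the servers through open slots lowers the effective ΔT, so more air has to be moved for the same heat load.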

Chilled water system

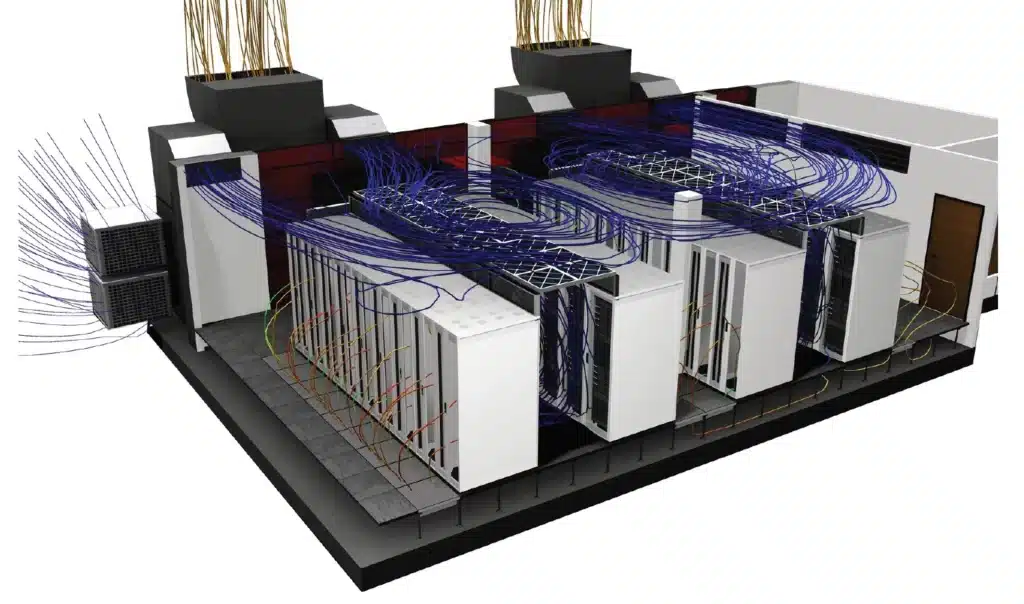

This technology is most commonly used in medium to large-scale data centers.

The air in the data center is supplied by air handling units, known as computer room air handlers (CRAH), and chilled water (supplied by a cooling system external to the facility) is used to cool the air.

What's the difference between CRAC and CRAH units?

CRAC units

- Use refrigerant

- Compressor required

CRAC units operate like home air conditioning units. They have a direct expansion system with compressors built right into the unit. They provide cooling by blowing air over a cooling coil filled with refrigerant, which is kept cold by the compressor inside the unit. The excess heat is then rejected through a glycol mixture, water or air. While most CRAC units deliver a constant air volume and are controlled only by switching on and off, new models are being developed that allow the airflow to vary.

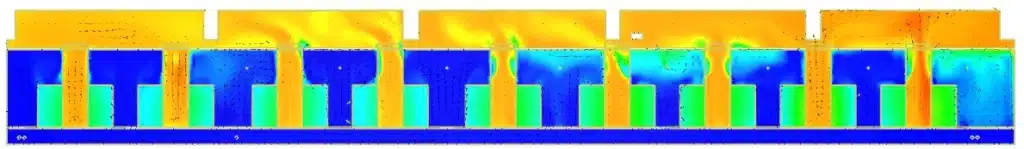

There are different ways to place CRAC units, but they are typically installed opposite the hot aisles of a data center. There, they release cooled air through the perforations in the raised floor (grilles or perforated floor tiles), cooling the computer servers.

CRAH units

- Use chilled water

- Have a control valve

CRAH units operate like chilled water air handlers installed in most office buildings. They provide cooling by blowing air over a cooling exchanger filled with chilled water. Chilled water is usually supplied by “Water Chillers” – otherwise known as a chilled water plant. CRAH units can regulate fan speed to maintain a set static pressure, ensuring that humidity levels and temperature remain stable.
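The benefit of regulating fan speed, rather than running at constant volume, can be illustrated with the ideal fan affinity laws: airflow scales with fan speed and power with the cube of speed. The sketch below is an idealisation that ignores motor and drive losses and any change in the system curve; the function name is hypothetical.

```python
def fan_power_fraction(speed_fraction: float) -> float:
    """Ideal fan affinity law: power scales with the cube of fan speed."""
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed fraction must be between 0 and 1")
    return speed_fraction ** 3


# Airflow is proportional to speed, so 80 % speed gives about 80 % airflow.
for speed in (1.0, 0.8, 0.6):
    print(f"speed {speed:.0%}: airflow {speed:.0%}, fan power {fan_power_fraction(speed):.0%}")
```

In this idealised picture, a fan running at 80 % speed draws roughly half of its full-speed power, which is why variable airflow is attractive when the room is only partly loaded.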

Chilled water can be produced by direct expansion chillers or by adiabatic dry coolers, which are much more energy-efficient.

What is the optimum temperature for a data center?

Server rooms and data centers contain a mixture of hot and cold air – server fans expel hot air during operation, while air conditioning and other cooling systems bring in cool air to counteract any hot exhaust air. Maintaining the right balance between hot and cold air has always been critical to keeping data centers available. If a data center gets too hot, the equipment is at a higher risk of failure. This failure often results in downtime, data loss and lost revenue.

In the 2000s, the recommended temperature range for the data center was 20 to 24°C. This is the range that the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) recommended as optimal for maximum equipment availability and life. This range allowed for better utilization and left enough of a buffer in case the air conditioning failed.

Since 2005, new standards and better equipment have become available, as have improved tolerances for higher temperature ranges. ASHRAE now recommends an operating temperature range of 18 to 27°C.
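As a minimal sketch of how this range can be used in monitoring, the snippet below classifies server inlet temperatures against the 18 to 27°C range quoted above. The rack names and readings are hypothetical, and the thresholds should be checked against the ASHRAE guidance that applies to your equipment.

```python
RECOMMENDED_MIN_C = 18.0  # lower bound quoted in this article
RECOMMENDED_MAX_C = 27.0  # upper bound quoted in this article


def classify_inlet(temp_c: float) -> str:
    """Return 'below', 'within' or 'above' relative to the recommended range."""
    if temp_c < RECOMMENDED_MIN_C:
        return "below"
    if temp_c > RECOMMENDED_MAX_C:
        return "above"
    return "within"


# Hypothetical rack inlet readings in °C.
readings = {"rack-A01": 22.5, "rack-A02": 28.1, "rack-B07": 17.2}
for rack, temp_c in readings.items():
    print(f"{rack}: {temp_c:.1f} °C is {classify_inlet(temp_c)} the recommended range")
```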

The rise in server inlet temperatures also makes free cooling and free chilling (systems that use outside air to blow fresh air into the room, or to cool the water in place of the chiller) much more attractive, especially in temperate regions like France. Indeed, with a room temperature setpoint of 25°C instead of 15°C, the periods of the year during which free cooling can be used without switching on the air conditioning are considerably longer. This generates significant energy savings and an improvement in PUE (Power Usage Effectiveness). The same goes for free chilling, which can be used more often during the year to cool the water loops, since water temperature setpoints are now 15°C instead of 7°C.
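The effect of the setpoint on free cooling availability can be sketched by counting the hours in which the outside air is cold enough. The example below uses a synthetic sinusoidal temperature profile, which is a hypothetical stand-in for real weather data, and an assumed 3 K margin between outdoor and supply air; neither value comes from this article.

```python
import math


def free_cooling_hours(outdoor_temps_c, setpoint_c, approach_c=3.0):
    """Count the hours in which outside air alone can hold the setpoint.

    approach_c is an assumed margin between outdoor and supply temperature.
    """
    threshold_c = setpoint_c - approach_c
    return sum(1 for temp_c in outdoor_temps_c if temp_c <= threshold_c)


def synthetic_year_c(mean_c=12.0, seasonal_amp_c=8.0, daily_amp_c=4.0):
    """Hypothetical hourly outdoor temperatures for one year (not measured data)."""
    temps = []
    for hour in range(8760):
        seasonal = seasonal_amp_c * math.cos(2 * math.pi * (hour / 24 - 200) / 365)
        daily = daily_amp_c * math.cos(2 * math.pi * (hour % 24 - 15) / 24)
        temps.append(mean_c + seasonal + daily)
    return temps


year = synthetic_year_c()
for setpoint_c in (15.0, 25.0):
    hours = free_cooling_hours(year, setpoint_c)
    print(f"setpoint {setpoint_c:.0f} °C: {hours} h of free cooling ({hours / 8760:.0%} of the year)")
```

With real hourly weather data for the site in place of the synthetic profile, the same count gives a first estimate of how many hours per year the compressors can stay off.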

What are the problems associated with too high a setpoint temperature in a data center?

Unfortunately, higher operating temperatures shorten the time available to respond when the temperature rises rapidly after a cooling unit failure. A data center whose servers already run at higher temperatures is at risk of near-simultaneous hardware failures. Recent ASHRAE guidelines emphasize the importance of proactive monitoring of environmental temperatures inside server rooms.

What happens if it gets too hot?

When the temperature inside the data center rises too high, the equipment can easily overheat. This can damage servers. Data could be lost, causing major problems for businesses that rely on data center services. That’s why all data centers must have cooling systems that can withstand a crisis or maintenance period.

What happens if the air conditioning systems break down?

Depending on the installed power density, the air temperature inside the server room can rise extremely quickly. In our simulations of power failures, we generally observe a temperature rise of the order of 1°C per minute. This results in a significant risk of hardware degradation and data loss if the redundancy and backup systems are not properly sized. In addition, the time the air conditioning compressors need to restart and return to full power is an issue for the most demanding halls. To delay the effects of the temperature rise, thermal inertia systems can store heat for a few minutes and smooth the temperature rise curve.
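A rise of about 1°C per minute translates directly into the time left before equipment reaches a critical temperature. The sketch below assumes a constant rise rate, which is a simplification of what a transient simulation would show; the 35°C limit and the optional buffer time are hypothetical values chosen for illustration.

```python
def minutes_until_limit(start_temp_c, limit_temp_c, rise_rate_c_per_min=1.0, buffer_min=0.0):
    """Time before the room reaches limit_temp_c after cooling is lost.

    Assumes a constant rise rate; buffer_min models a thermal inertia
    system that delays the start of the rise.
    """
    if rise_rate_c_per_min <= 0:
        raise ValueError("rise rate must be positive")
    margin_c = max(0.0, limit_temp_c - start_temp_c)
    return buffer_min + margin_c / rise_rate_c_per_min


LIMIT_C = 35.0  # hypothetical critical inlet temperature

for setpoint_c in (20.0, 25.0):
    print(f"setpoint {setpoint_c:.0f} °C: {minutes_until_limit(setpoint_c, LIMIT_C):.1f} min")
# setpoint 20 °C: 15.0 min
# setpoint 25 °C: 10.0 min
```

Raising the setpoint from 20 to 25°C removes a third of the response time in this example, which shows why higher setpoints need to go hand in hand with redundancy and close monitoring.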

Why run a CFD simulation of a data center?

CFD simulation provides information on the relationship between the operation of mechanical systems and changes in the thermal load of IT equipment. With this information, IT and site staff can optimize airflow efficiency and maximize cooling capacity.